
In modern microservices and cloud-native architectures, caching is one of the most powerful techniques to improve performance, reduce database load, and ensure scalability. Distributed systems, however, introduce challenges like data consistency, cache invalidation, and synchronization across multiple nodes.

Understanding caching patterns is essential for designing robust, high-performance systems.

Distributed caching stores frequently accessed data in a shared cache layer that multiple application instances can use.

Benefits:

Popular Distributed Caches:

Caching patterns define how applications interact with cache and database. Choosing the right pattern is critical for performance, consistency, and scalability.

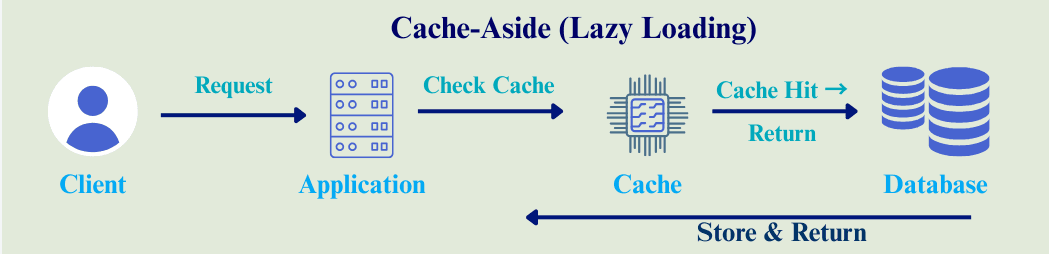

Cache-Aside, also called Lazy Loading, is one of the most popular caching patterns.

Spring Boot Example (Redis Cache):

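    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.cache.annotation.Cacheable;
    import org.springframework.stereotype.Service;

    @Service
    public class ProductService {

        @Autowired
        private ProductRepository repository;

        // On a cache hit Spring returns the cached value and skips the method body.
        // On a miss the method runs and its result is stored under "products::<id>".
        @Cacheable(value = "products", key = "#id")
        public Product getProductById(Long id) {
            System.out.println("Fetching from database...");
            return repository.findById(id).orElse(null);
        }
    }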
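To make the lazy-loading steps explicit, here is a minimal plain-Java sketch of what a cache-aside read does by hand. `CacheAsideReader` and its `invalidate` method are hypothetical names, the in-memory map is only a stand-in for a distributed cache such as Redis, and the loader function stands in for `repository.findById`.

```java
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

// Hypothetical helper illustrating a manual cache-aside read path.
class CacheAsideReader<K, V> {
    // Stand-in for a distributed cache (assumption: a real system would use Redis or similar).
    private final Map<K, V> cache = new ConcurrentHashMap<>();
    // Stand-in for the database lookup, e.g. repository::findById.
    private final Function<K, Optional<V>> databaseLoader;

    CacheAsideReader(Function<K, Optional<V>> databaseLoader) {
        this.databaseLoader = databaseLoader;
    }

    Optional<V> get(K key) {
        // 1. Try the cache first.
        V cached = cache.get(key);
        if (cached != null) {
            return Optional.of(cached);
        }
        // 2. On a miss, the application itself reads from the database.
        Optional<V> loaded = databaseLoader.apply(key);
        // 3. Populate the cache so the next read is a hit.
        loaded.ifPresent(value -> cache.put(key, value));
        return loaded;
    }

    // Call after an update or delete so the next read reloads fresh data.
    void invalidate(K key) {
        cache.remove(key);
    }
}
```

The point of the sketch is that the application, not the cache, owns the loading logic. That is why the first request for a key always pays the database cost, and why writes need an explicit invalidation step.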

How it behaves:

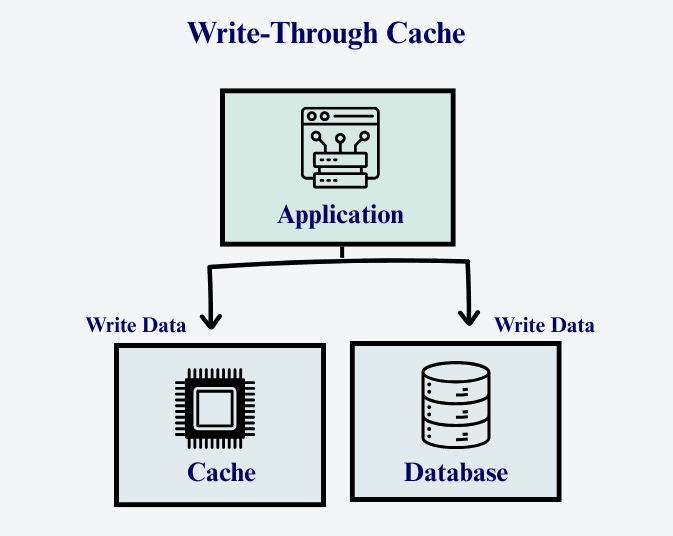

Spring Boot Example:

    // @CachePut always runs the method, then stores the returned value in the cache.
    @CachePut(value = "products", key = "#product.id")
    public Product saveProduct(Product product) {
        return repository.save(product);
    }
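The `@CachePut` method above updates the database and the cache in one call, which is the write-through behaviour the use-case table refers to. As a rough illustration of that ordering without Spring, the sketch below uses a hypothetical `WriteThroughStore` class in which both maps are in-memory stand-ins (one for the database, one for Redis).

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical write-through store; both maps are in-memory stand-ins.
class WriteThroughStore<K, V> {
    private final Map<K, V> database = new ConcurrentHashMap<>(); // system of record
    private final Map<K, V> cache = new ConcurrentHashMap<>();    // e.g. Redis

    void save(K key, V value) {
        // Write the database first; if this throws, the cache is left untouched.
        database.put(key, value);
        // Then update the cache in the same call, before returning to the caller.
        cache.put(key, value);
    }

    V read(K key) {
        // Reads after a save are served from the cache.
        return cache.get(key);
    }

    V readFromDatabase(K key) {
        return database.get(key);
    }
}
```

Because both writes complete before `save` returns, the cache and the database agree immediately after every write, at the cost of slower writes.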

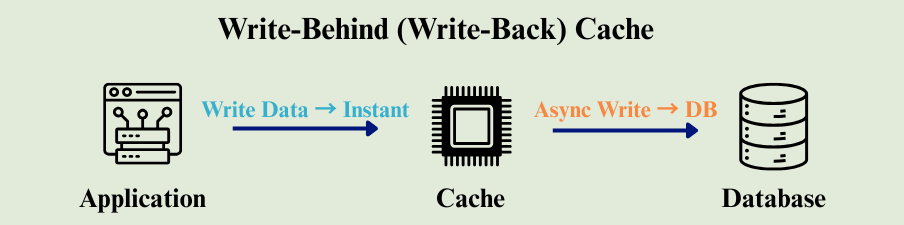

Write-Behind, also called Write-Back caching, is designed for high-performance write operations.

Spring Boot Example:

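    // Note: repository.save(...) runs synchronously here, so the database is
    // updated before the method returns. Spring's cache annotations do not defer
    // the database write; true write-behind needs a separate asynchronous flush.
    @CachePut(value = "products", key = "#product.id")
    public Product saveProduct(Product product) {
        return repository.save(product);
    }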
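Since write-behind means the cache is updated immediately and the database later, a sketch of the deferred part may help. The `WriteBehindStore` class below is hypothetical, the maps are in-memory stand-ins for Redis and the database, and `flush()` is called by hand here; in a real service it would more likely be driven by a scheduled background task.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical write-behind store; maps are in-memory stand-ins.
class WriteBehindStore<K, V> {
    private final Map<K, V> cache = new ConcurrentHashMap<>();    // e.g. Redis
    private final Map<K, V> database = new ConcurrentHashMap<>(); // system of record
    // Pending writes, keyed so repeated updates to one key coalesce into one DB write.
    private final Map<K, V> dirty = new HashMap<>();

    void save(K key, V value) {
        // The caller only waits for the cache update.
        cache.put(key, value);
        synchronized (dirty) {
            dirty.put(key, value);
        }
    }

    // Writes all pending changes to the database and returns how many keys were flushed.
    int flush() {
        Map<K, V> batch;
        synchronized (dirty) {
            batch = new HashMap<>(dirty);
            dirty.clear();
        }
        database.putAll(batch);
        return batch.size();
    }

    V read(K key) {
        return cache.get(key);
    }

    V readFromDatabase(K key) {
        return database.get(key);
    }
}
```

The trade-off is visible in the `dirty` map: anything still waiting there is lost if the process dies before the next flush, which is why write-behind suits data that can tolerate brief inconsistency.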

How it behaves:

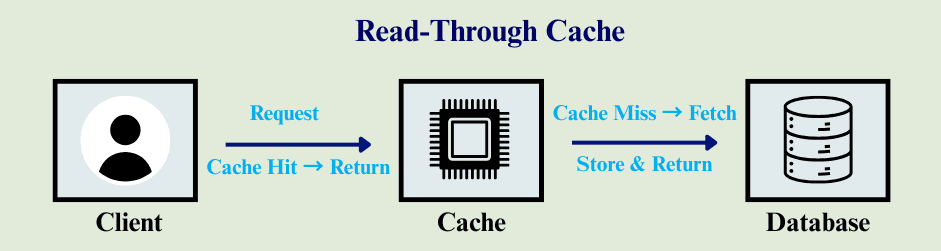

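Read-Through caching puts the cache in front of the database: the application always reads from the cache, and on a miss the cache layer itself loads the value from the database and stores it.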

Spring Boot Example (Redis Cache):

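    import java.time.Duration;
    import org.springframework.cache.CacheManager;
    import org.springframework.context.annotation.Bean;
    import org.springframework.context.annotation.Configuration;
    import org.springframework.data.redis.cache.RedisCacheConfiguration;
    import org.springframework.data.redis.cache.RedisCacheManager;
    import org.springframework.data.redis.connection.RedisConnectionFactory;

    // Assumes caching is enabled elsewhere in the application (e.g. via @EnableCaching).
    @Configuration
    public class RedisCacheConfig {

        @Bean
        public CacheManager cacheManager(RedisConnectionFactory factory) {
            return RedisCacheManager.builder(factory)
                    // Entries in every cache expire 10 minutes after they are written.
                    .cacheDefaults(RedisCacheConfiguration.defaultCacheConfig()
                            .entryTtl(Duration.ofMinutes(10)))
                    .build();
        }
    }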
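The configuration above only sets up the cache manager and a TTL, so the read-through idea itself is easier to see in isolation. The sketch below is a hypothetical `ReadThroughCache` in plain Java: the cache owns a loader function (a stand-in for the database call) and invokes it on a miss or after expiry, so callers only ever talk to the cache.

```java
import java.time.Duration;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

// Hypothetical read-through cache: the cache, not the caller, loads missing values.
class ReadThroughCache<K, V> {
    private static final class Entry<T> {
        final T value;
        final long expiresAtNanos;

        Entry(T value, long expiresAtNanos) {
            this.value = value;
            this.expiresAtNanos = expiresAtNanos;
        }
    }

    private final Map<K, Entry<V>> entries = new ConcurrentHashMap<>();
    private final Function<K, V> loader; // stand-in for the database read
    private final long ttlNanos;

    ReadThroughCache(Function<K, V> loader, Duration ttl) {
        this.loader = loader;
        this.ttlNanos = ttl.toNanos();
    }

    V get(K key) {
        long now = System.nanoTime();
        // compute() runs atomically per key, so concurrent misses trigger a single load.
        Entry<V> entry = entries.compute(key, (k, existing) -> {
            if (existing != null && now - existing.expiresAtNanos < 0) {
                return existing; // still fresh
            }
            return new Entry<>(loader.apply(k), now + ttlNanos); // miss or expired
        });
        return entry.value;
    }
}
```

Compared with cache-aside, the calling code shrinks to a single `get`, and the loading policy lives in one place.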

How it behaves:

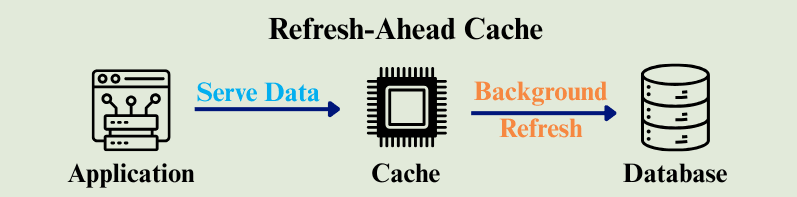

Refresh-Ahead caching preemptively refreshes data in the cache before it expires.

How it behaves:

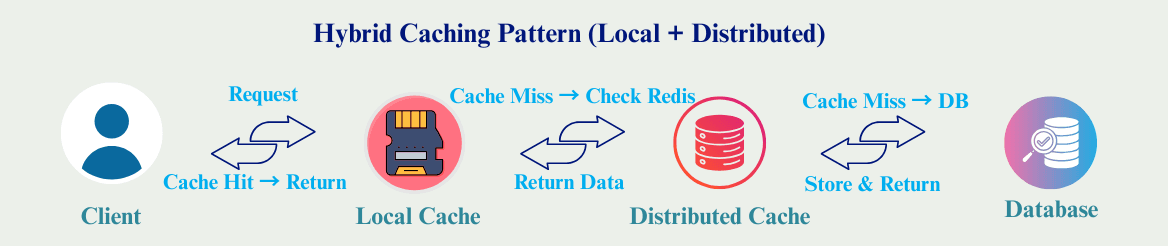
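As one possible way to picture Refresh-Ahead, the sketch below reloads an entry once it passes a refresh threshold that is shorter than its TTL, while still serving the current value. Everything here is an assumption made for illustration: the `RefreshAheadCache` class, the two thresholds, the injected clock, and the executor that runs the reload.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.Executor;
import java.util.function.Function;
import java.util.function.LongSupplier;

// Hypothetical refresh-ahead cache: entries are reloaded shortly before they expire.
class RefreshAheadCache<K, V> {
    private static final class Entry<T> {
        final T value;
        final long loadedAtMillis;

        Entry(T value, long loadedAtMillis) {
            this.value = value;
            this.loadedAtMillis = loadedAtMillis;
        }
    }

    private final Map<K, Entry<V>> entries = new ConcurrentHashMap<>();
    private final Function<K, V> loader;    // stand-in for the database read
    private final long ttlMillis;           // entry is unusable after this age
    private final long refreshAfterMillis;  // entry is refreshed after this age
    private final LongSupplier clock;       // injected so the behaviour is testable
    private final Executor refreshExecutor; // runs the reload, ideally in the background

    RefreshAheadCache(Function<K, V> loader, long ttlMillis, long refreshAfterMillis,
                      LongSupplier clock, Executor refreshExecutor) {
        this.loader = loader;
        this.ttlMillis = ttlMillis;
        this.refreshAfterMillis = refreshAfterMillis;
        this.clock = clock;
        this.refreshExecutor = refreshExecutor;
    }

    V get(K key) {
        long now = clock.getAsLong();
        Entry<V> entry = entries.get(key);
        if (entry == null || now - entry.loadedAtMillis >= ttlMillis) {
            // Miss or expired: the caller has to wait for a synchronous load.
            Entry<V> fresh = new Entry<>(loader.apply(key), now);
            entries.put(key, fresh);
            return fresh.value;
        }
        if (now - entry.loadedAtMillis >= refreshAfterMillis) {
            // Still valid but close to expiry: reload ahead of time.
            refreshExecutor.execute(
                () -> entries.put(key, new Entry<>(loader.apply(key), clock.getAsLong())));
        }
        // Serve the current value without waiting for the refresh.
        return entry.value;
    }
}
```

With a TTL of 100 ms and a refresh threshold of 80 ms, a key that is read regularly is reloaded before it ever expires, so readers do not hit a cold miss. A production version would also want to avoid starting several refreshes for the same key at once, which this sketch does not attempt.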

Hybrid caching combines ultra-fast local cache with a shared distributed cache to get the best of both worlds.

How it behaves:

Client Request → CaffeineCache → RedisCache → Database
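The lookup chain above can be sketched as a two-level read. In this hypothetical `TwoLevelCache`, a per-instance map stands in for Caffeine, a map passed in by the caller stands in for Redis (so several instances can share it), and the loader function stands in for the database.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

// Hypothetical two-level cache: local (L1) in front of shared (L2) in front of the database.
class TwoLevelCache<K, V> {
    private final Map<K, V> localCache = new ConcurrentHashMap<>(); // stand-in for Caffeine
    private final Map<K, V> sharedCache;                            // stand-in for Redis
    private final Function<K, V> databaseLoader;                    // stand-in for the database

    TwoLevelCache(Map<K, V> sharedCache, Function<K, V> databaseLoader) {
        this.sharedCache = sharedCache;
        this.databaseLoader = databaseLoader;
    }

    V get(K key) {
        // 1. Local cache: no network hop.
        V value = localCache.get(key);
        if (value != null) {
            return value;
        }
        // 2. Shared cache: one network hop in a real deployment.
        value = sharedCache.get(key);
        if (value == null) {
            // 3. Database: slowest path, taken only when both caches miss.
            value = databaseLoader.apply(key);
            if (value == null) {
                return null;
            }
            sharedCache.put(key, value);
        }
        // Remember the value locally for subsequent reads on this instance.
        localCache.put(key, value);
        return value;
    }

    // Drop the local copy, e.g. when another instance signals that the key changed.
    void evictLocal(K key) {
        localCache.remove(key);
    }
}
```

Local copies can go stale when another instance updates the same key, so a real setup usually pairs this with a short local TTL or an invalidation message between instances.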

Benefits:

| Use Case | Pattern | Why Caching Helps |
|---|---|---|
| Product Catalog | Cache-Aside | Reduces DB queries for frequently viewed items |
| User Session | Write-Through | Keeps cache and DB in sync for login info |
| Leaderboards | Refresh-Ahead | Ensures updated rankings without cache misses |
| API Rate Limiting | Write-Behind | Updates counters asynchronously to DB |
| Configuration Data | Local + Distributed | Fast access via local cache, shared via Redis |

Caching is a cornerstone of performance optimization in distributed systems. By choosing the right pattern (Cache-Aside, Write-Through, Write-Behind, Read-Through, or Refresh-Ahead), you can balance performance, consistency, and scalability.

For modern microservices, hybrid caching combining local and distributed layers often provides the best of both worlds: ultra-fast local reads and consistent, shared distributed caching.